‘No More Marking’ is a freely available online tool that helps judges (e.g. teachers) to apply judgement to the best possible effect, using a technique called ‘Comparative Judgement’. Neil Jones describes its use in a project undertaken by the Bell Foundation to develop scales of language proficiency to support teachers of EAL (English as an Additional Language).

Comparative Judgement (CJ)

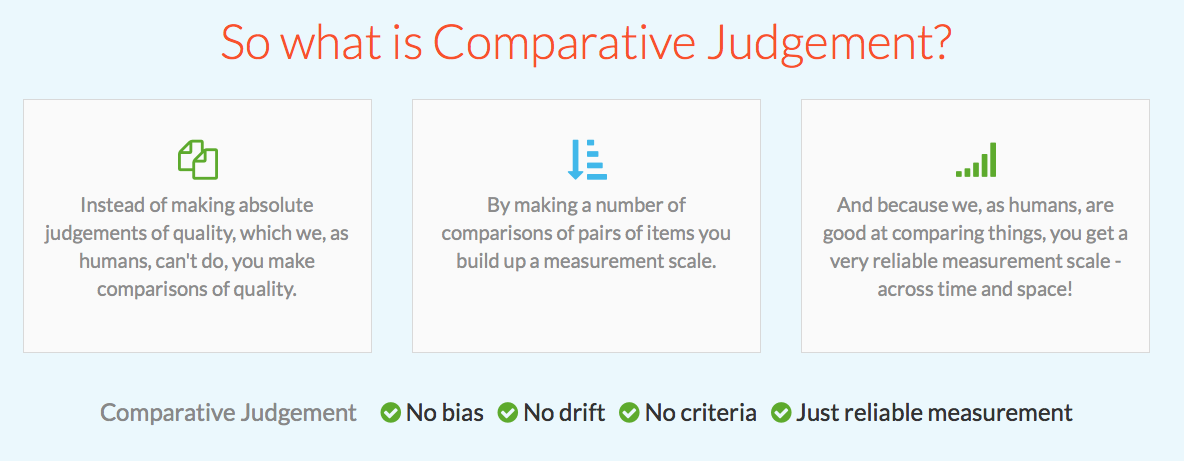

Comparative Judgement (CJ) is about making relative, rather than absolute judgements. In the context of this study the objects of comparison were descriptors sampled from the range of existing descriptive scales. The judgement concerns which description of a student’s performance indicates a higher proficiency level compared with another. It is a very flexible approach: although the individual judgements are very easy to make, we can combine a large number of them to produce a very accurate scale. The more judgements that contribute to this ordering, of course, the more precise the outcomes will be.

Comparative Judgement provides simple ways of bringing psychometric procedures to bear on organising and standardising human judgement. It plays to the strengths of that judgement by asking for relative rather than absolute decisions.
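To make the idea of combining many easy judgements into one scale concrete, here is a minimal sketch in Python. It assumes a Bradley-Terry model, one common choice for paired-comparison data; this article does not state which model the NMM platform applies. The function name, the (winner, loser) data layout and the example judgements are all invented for illustration.

```python
import math
from collections import defaultdict


def fit_bradley_terry(judgements, iterations=200):
    """Return a log-scale estimate per item from (winner, loser) pairs.

    Assumption: every item wins at least once and loses at least once;
    otherwise its estimate would drift towards plus or minus infinity.
    """
    items = sorted({item for pair in judgements for item in pair})
    wins = defaultdict(int)
    met = defaultdict(int)  # how often each unordered pair was compared
    for winner, loser in judgements:
        wins[winner] += 1
        met[frozenset((winner, loser))] += 1

    strength = {item: 1.0 for item in items}
    for _ in range(iterations):
        updated = {}
        for i in items:
            # Sum over every opponent that item i was actually compared with.
            denom = sum(
                met[frozenset((i, j))] / (strength[i] + strength[j])
                for j in items
                if j != i and frozenset((i, j)) in met
            )
            updated[i] = wins[i] / denom
        # Fix the origin of the scale: rescale so the mean log-strength is 0.
        mean_log = sum(math.log(s) for s in updated.values()) / len(items)
        strength = {i: s / math.exp(mean_log) for i, s in updated.items()}
    return {i: math.log(s) for i, s in strength.items()}


# Hypothetical judgements: the first element of each pair is the descriptor
# that a judge picked as the more advanced of the two.
judgements = (
    [("C", "B")] * 3 + [("B", "C")]
    + [("B", "A")] * 3 + [("A", "B")]
    + [("C", "A")] * 3 + [("A", "C")]
)
scale = fit_bradley_terry(judgements)
ordering = sorted(scale, key=scale.get)  # least to most advanced
```

Even though individual judges disagree on some pairs in this toy data, the fitted estimates place the three descriptors in a single order, and adding more judgements would tighten those estimates.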

No More Marking

The principles of CJ have long been understood but there seems to be a new interest in exploiting CJ to address language assessment issues. The No More Marking (NMM) website is the brainchild of Dr. Chris Wheadon and, as the name indicates, it sets out to replace traditional approaches to marking exams by using CJ. In this project we used it to compare the ‘descriptors’ used by different assessment systems.

Setting up the CJ study

The study required a large amount of preparation. The first task was to construct a spreadsheet in which each row represented a single descriptor. The columns captured the essential detail about each descriptor: its source, age focus, level, skill, stage and so on, together with the text of the descriptor itself, which was saved as a separate PDF file.

Then the descriptor texts (the samples that the judges would assess) each had to be uploaded into the NMM platform. We analysed over 1,500 descriptors and organised them into a number of categories, most importantly grouping them by skill (e.g. Reading, Writing, Listening and Speaking), as it was important to compare like with like.

We contacted a large number of people to act as judges, especially practising language teachers. Having such a range of judges also helped us to pick out any errors in the categorisations and to fine-tune the database on which the judgements would be made.

The judging process

When they begin the process, each judge sees a pair of descriptors (example sentences). Their task is simple: to click on the descriptor which is the more difficult or advanced. They are presented with many such pairs, but each one calls for a simple decision (and each descriptor is very short). Descriptors may appear several times, and each pairing adds to the reliability of the measure. Judges had a fixed allocation of judgements to make for each category, and most judges completed more than one category.

Analysing the data

The data downloaded from the NMM platform captures:

- the difficulty estimates of the descriptors, with a range of quality indices,

- the performance of judges, again with quality indices,

- the raw data – a list of every interaction between two descriptors.

These tables are provided for each skill category defined. The NMM platform thus does the majority of the data processing, but also enables the researcher to do further work on the downloaded data – in this case, to regroup the categories by skill and recalibrate them.
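As a rough illustration of that further work, the sketch below regroups a raw list of pairwise judgements by skill so that each group can be recalibrated separately. The (winner, loser) layout, the `skill_of` lookup and the descriptor IDs are assumptions made for this example; the actual column names in the NMM download are not given here.

```python
from collections import defaultdict


def regroup_by_skill(raw_judgements, skill_of):
    """Group (winner, loser) pairs by the skill shared by both descriptors.

    Pairs whose two descriptors belong to different skills are dropped,
    so that each group only compares like with like.
    """
    grouped = defaultdict(list)
    for winner, loser in raw_judgements:
        skill = skill_of[winner]
        if skill_of[loser] == skill:
            grouped[skill].append((winner, loser))
    return dict(grouped)


# Hypothetical descriptor IDs and skills, for illustration only.
skill_of = {"r1": "Reading", "r2": "Reading", "w1": "Writing", "w2": "Writing"}
raw = [("r1", "r2"), ("w2", "w1"), ("r2", "r1"), ("r1", "w1")]
by_skill = regroup_by_skill(raw, skill_of)
```

Each list in `by_skill` could then be passed to whatever scaling routine is used for recalibration.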

Some findings from CJ data: A global scale

The study reveals a clear overall picture and some interesting patterns. For example:

- The CEFR levels cover a wide range: A1 is a beginner level, C2 is very advanced (more advanced than any other scale);

- The Welsh 5-stage system, which informs the DfE’s new ‘EAL Stages of Proficiency’, also covers a wide range, confirming the difference between Stage A (new to English) and Stage E (Fluent);

- The two suites of levels (ACARA and NASSEA) demonstrate a similar pattern, with each level in the suite overlapping but extending the level below.

It is encouraging that the NMM data thus appear to confirm what are doubtless carefully designed features of these suites.

Discussion

Comparative judgement is potentially a powerful tool which can provide insights when other approaches are not applicable. Like any tool, it needs careful use: sufficient raters must be recruited to achieve the degree of reliability required; some training is important, to ensure that raters are using the same set of criteria; and the quality of the data (e.g. the number of judgements offered for a descriptor) must be verified.
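One of these checks, the number of judgements each descriptor has received, is easy to run on the raw data. The following is a minimal sketch; the data layout, the descriptor IDs and the threshold of 2 are placeholders chosen for the example, not values from the study.

```python
from collections import Counter


def judgement_counts(raw_judgements, descriptors):
    """Count how many judgements each descriptor took part in."""
    counts = Counter({d: 0 for d in descriptors})  # keep never-judged items
    for winner, loser in raw_judgements:
        counts[winner] += 1
        counts[loser] += 1
    return counts


def under_judged(raw_judgements, descriptors, minimum):
    """List descriptors that appeared in fewer than `minimum` judgements."""
    counts = judgement_counts(raw_judgements, descriptors)
    return sorted(d for d, n in counts.items() if n < minimum)


# Hypothetical data: d4 was uploaded but never shown to a judge.
descriptors = ["d1", "d2", "d3", "d4"]
raw = [("d1", "d2"), ("d1", "d3"), ("d2", "d1")]
flagged = under_judged(raw, descriptors, minimum=2)
```

Descriptors flagged in this way would need more judgements before their estimates could be relied on.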

Comparative judgement can replicate scales quite accurately, but I believe this is an area which still deserves closer study. Using CJ to construct a measurement scale where no scale previously existed is straightforward (and valuable); but where the aim is to relate to an existing scale, one should not take for granted that the two scales will exhibit a perfectly linear relationship.
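A simple way to probe this is to compare a linear correlation (Pearson) with a rank correlation (Spearman) between the existing levels and the CJ estimates. If the rank correlation is close to 1 while the linear one is noticeably lower, the CJ scale preserves the order of the levels but not their spacing. The sketch below uses made-up numbers purely to show the calculation; they are not results from this study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation: strength of the linear relationship."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def ranks(values):
    """1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            result[order[k]] = mean_rank
        pos = end + 1
    return result


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


# Invented example: five levels of an existing scale and CJ estimates that
# rise with the level but flatten out towards the top.
existing_levels = [1, 2, 3, 4, 5]
cj_estimates = [-3.0, -1.0, 0.0, 0.5, 0.7]
linear = pearson(existing_levels, cj_estimates)
monotone = spearman(existing_levels, cj_estimates)
```

Here `monotone` is higher than `linear`, which is the pattern one would expect when two scales agree on order but are not linearly related.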

I believe that Comparative Judgement has considerable promise. Its use for marking should lead to demonstrably more equitable procedures, as the No More Marking site intends.

Another promising possibility is to use CJ for actually grading and calibrating test items. In most parts of Europe at least, most educational assessment does not make use of strong psychometric models based on Item Response Theory, so that although governments may insist on the importance of ‘standards’, there are only weak mechanisms in place for determining whether standards are rising, falling or staying much the same. One reason for neglecting the strong psychometric technologies which offer a grasp on standards is that they involve pretesting, in order to determine the difficulty of tasks, and pretesting is widely seen as impossible for reasons of test security. Thus a valuable use of CJ in assessment might be to provide data for an approach with all the strengths of IRT but without pretesting – for example, by enabling this year’s tasks to be equated to last year’s tasks securely, by a panel of judges. No More Pretesting?

Neil’s post is based on a longer article he wrote for the Cambridge Research Notes series, which is available to download for free here. The No More Marking website is here.